Complete Guide to Image Labeling for Machine Learning

Image labeling enables you to tag and identify specific details in an image. In computer vision, image labeling involves adding specific tags to raw data, including videos and images. Each tag represents a certain object class associated with this data.

Image annotation is a type of image labeling used to create datasets for computer vision models. You can split these datasets into a training set, used to train ML models, and validation and test sets, used to evaluate model performance.

Data scientists and machine learning engineers employ these datasets to train and evaluate ML models. At the end of the training period, the model can automatically assign labels to unlabeled data.
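As a rough illustration of the split described above, the following minimal sketch shuffles a list of labeled examples and divides it into training, validation, and test sets. The function name `split_dataset`, the 70/15/15 proportions, and the file names are assumptions made for this example, not something the article prescribes.

```python
import random

def split_dataset(items, train_pct=70, val_pct=15, seed=0):
    """Shuffle labeled items and split them into train/validation/test lists."""
    shuffled = list(items)
    # A fixed seed keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * train_pct // 100
    n_val = len(shuffled) * val_pct // 100
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever remains is held out for testing
    return train, val, test

# Hypothetical labeled examples: (file name, label) pairs.
labeled = [(f"img_{i:03d}.jpg", "cat" if i % 2 else "dog") for i in range(20)]
train, val, test = split_dataset(labeled)
print(len(train), len(val), len(test))
```

Keeping the test set separate from anything the model sees during training is what makes the final evaluation meaningful.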

Why Is Image Labeling Important for AI and Machine Learning?

Image labeling enables supervised machine learning models to achieve computer vision capabilities. Data scientists use image labeling to train ML models to:

- Label an entire image to learn its meaning

- Identify object classes within an image

Essentially, image labeling enables ML models to understand the content of images. Image labeling techniques and tools help ML models capture or highlight specific objects within each image, making images readable by machines. This capability is crucial for developing functional AI models and improving computer vision.

Image labeling and annotation enable object recognition in machines to improve computer vision accuracy. Using labels to train AI and ML helps the models learn to detect patterns. The models run through this process until they can recognize objects independently.

Types of Computer Vision Image Labeling

Image Classification

You can annotate data for image classification by adding a tag to an image. The number of unique tags in a dataset matches the number of classes the model can learn to classify.

Here are the three key classification types:

- Binary class classification: Includes only two tags

- Multiclass classification: Includes multiple tags

- Multi-label classification: Each image can have more than one tag
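One common way to turn these tags into training targets is to encode them as numbers. The sketch below shows one possible encoding for each of the three classification types; the class list and helper names (`one_hot`, `multi_hot`) are hypothetical and chosen only for illustration.

```python
# Hypothetical class list for the examples below.
classes = ["cat", "dog", "bird"]

def one_hot(tag, classes):
    """Multiclass: exactly one class is marked with 1."""
    return [1 if c == tag else 0 for c in classes]

def multi_hot(tags, classes):
    """Multi-label: every class present in the image is marked with 1."""
    tag_set = set(tags)
    return [1 if c in tag_set else 0 for c in classes]

# Binary: a single 0/1 value, e.g. "contains a cat" versus "does not".
binary_label = 1
multiclass_label = one_hot("dog", classes)
multilabel_label = multi_hot(["cat", "bird"], classes)

print(binary_label)       # 1
print(multiclass_label)   # [0, 1, 0]
print(multilabel_label)   # [1, 0, 1]
```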

Image Segmentation

Image segmentation involves using computer vision models to separate objects in an image from their backgrounds and other objects.

It usually requires creating a pixel map the same size as the image, using the number 1 to indicate the object is present and the number 0 to indicate no annotations exist.

Segmenting multiple objects in the same image involves concatenating pixel maps for each object channel-wise and using the maps as ground truth for the model.
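The pixel maps described above can be sketched with plain nested lists. This example assumes rectangular objects purely to keep the masks easy to read; real segmentation masks follow the object outline, and the helper names are hypothetical.

```python
def rectangle_mask(height, width, top, left, bottom, right):
    """Binary pixel map: 1 inside the (inclusive) rectangle, 0 elsewhere."""
    return [
        [1 if top <= r <= bottom and left <= c <= right else 0
         for c in range(width)]
        for r in range(height)
    ]

def stack_channels(masks):
    """Combine per-object masks channel-wise: result[row][col] lists one value per object."""
    height, width = len(masks[0]), len(masks[0][0])
    return [
        [[m[r][c] for m in masks] for c in range(width)]
        for r in range(height)
    ]

# Two objects in a 4 x 5 image, one mask per object.
mask_a = rectangle_mask(4, 5, top=0, left=0, bottom=1, right=1)
mask_b = rectangle_mask(4, 5, top=2, left=3, bottom=3, right=4)

# Ground truth with one channel per object.
target = stack_channels([mask_a, mask_b])
print(target[0][0])  # [1, 0] -> pixel belongs to object A only
print(target[3][4])  # [0, 1] -> pixel belongs to object B only
```

In practice an array library would usually hold these maps, but the structure is the same: one channel per object, each the same size as the image.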

Object Detection

Object detection involves using computer vision to identify objects and their specific locations. Unlike image classification, object detection processes annotate each object using bounding boxes.

A bounding box consists of the smallest rectangular segment containing an object in the image. Bounding box annotations are often accompanied by tags, providing each bounding box with a label in the image.

The coordinates of bounding boxes and associated tags are usually stored in a separate JSON file in a dictionary format. Typically, the image number or image ID is the dictionary’s key.
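To make the storage format concrete, here is a minimal sketch that derives the smallest enclosing rectangle from a binary object mask and saves it in a dictionary keyed by image ID. The field names (`label`, `bbox`, `x_min`, and so on) and the image ID are assumptions for this example; annotation tools and public datasets each define their own schema.

```python
import json

def bounding_box(mask):
    """Return the smallest axis-aligned rectangle containing all 1-pixels in the mask."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not rows:
        return None  # the mask contains no object
    return {"x_min": cols[0], "y_min": rows[0], "x_max": cols[-1], "y_max": rows[-1]}

mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
box = bounding_box(mask)

# Dictionary keyed by image ID; each image can hold several tagged boxes.
annotations = {"image_0001": [{"label": "car", "bbox": box}]}
serialized = json.dumps(annotations, indent=2)
print(serialized)
```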

Pose Estimation

Pose estimation involves using computer vision models to estimate a person’s pose in an image. It works to detect key points in the human body and correlate them to estimate the pose, meaning the key points serve as the corresponding ground truth for pose estimation.

Pose estimation requires labeling simple coordinate data with tags. Each coordinate indicates the location of a certain key point, which is identified by a tag in the image.
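A pose annotation of this kind can be sketched as a mapping from key point tags to pixel coordinates. The key point names, coordinates, and the `skeleton` pairs below are hypothetical values chosen for illustration.

```python
import math

# Each tag names a key point; each value is its (x, y) pixel coordinate.
keypoints = {
    "left_shoulder": (100, 130),
    "right_shoulder": (140, 130),
    "left_elbow": (70, 170),
}

# Pairs of key points that are connected when the pose is drawn.
skeleton = [("left_shoulder", "right_shoulder"), ("left_shoulder", "left_elbow")]

def segment_lengths(keypoints, skeleton):
    """Distance in pixels between each connected pair of key points."""
    return {
        (a, b): math.dist(keypoints[a], keypoints[b])
        for a, b in skeleton
    }

lengths = segment_lengths(keypoints, skeleton)
print(lengths[("left_shoulder", "right_shoulder")])  # 40.0
print(lengths[("left_shoulder", "left_elbow")])      # 50.0
```

A quick check such as segment length can help spot mislabeled key points, for example an elbow placed implausibly far from its shoulder.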

Effective Image Labeling for Computer Vision Projects

The following best practices can help you perform more effective image selection and labeling for computer vision models:

- Include both machine learning and domain experts in initial image selection.

- Start with a small batch of images, annotate them, and get feedback from all stakeholders to prevent misunderstandings and understand exactly what images are needed.

- Consider what your model needs to detect, and ensure you have sufficient variation of appearance, lighting, and image capture angles.

- When detecting objects, ensure you select images of all common variations of the object – for example, if you are detecting cars, ensure you have images of different colors, manufacturers, angles, and lighting conditions.

- Go through the dataset at the beginning of the project, consider cases that are more difficult to classify, and come up with consistent strategies to deal with them. Ensure you document and communicate your decisions clearly to the entire team.

- Consider factors that will make it more difficult for your model to detect an object, such as occlusion or poor visibility. Decide whether to exclude these images, or purposely include them to ensure your model can train on real-world conditions.

- Pay attention to quality, perform rigorous QA, and prefer to have more than one data labeler work on each image, so they can verify each other’s work. Mismatched labels can negatively affect data quality and will hurt the model’s performance.

- As a general rule, exclude images that are not sharp enough, or do not have enough visual information. But take into account that the model will not be able to work with these types of images in real life.

- Use existing datasets – large public datasets can contain hundreds of thousands or millions of images across dozens to thousands of categories. Two common examples are ImageNet and COCO.

- Use transfer learning techniques to leverage visual knowledge from similar, pre-trained models and use it for your own models.
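The practice of having more than one labeler work on each image can be supported with a simple agreement check. The sketch below is one possible approach, assuming each image has a list of tags from different labelers: it accepts a label only when enough labelers agree and flags the rest for review. The function name, threshold, and data are hypothetical.

```python
from collections import Counter

def review_labels(labels_by_image, min_agreement=1.0):
    """Accept the most common label when agreement is high enough; flag the rest."""
    consensus, needs_review = {}, []
    for image_id, labels in labels_by_image.items():
        top_label, votes = Counter(labels).most_common(1)[0]
        agreement = votes / len(labels)
        if agreement >= min_agreement:
            consensus[image_id] = top_label
        else:
            needs_review.append(image_id)  # mismatched labels go back to QA
    return consensus, needs_review

# Hypothetical tags from two labelers per image.
labels_by_image = {
    "img_1": ["car", "car"],
    "img_2": ["car", "truck"],
    "img_3": ["truck", "truck"],
}
consensus, needs_review = review_labels(labels_by_image)
print(consensus)     # {'img_1': 'car', 'img_3': 'truck'}
print(needs_review)  # ['img_2']
```

Lowering `min_agreement` turns the check into a majority vote, which may suit larger labeling teams.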

In this article, I explained why image labeling is critical for machine learning models related to computer vision. I discussed the important types of image labeling – image classification, image segmentation, object detection, and pose estimation.

Finally, I provided some best practices that can help you make image labeling more effective, including:

- Include experts in initial image selection

- Start with a small batch of images

- Ensure you capture all common variations of the object

- Consider edge cases and how to deal with them

- Consider factors like occlusion or poor visibility

- Pay attention to quality

- Use existing datasets if possible and use transfer learning to leverage knowledge from similar, pre-trained models for your own models.

I hope this will be useful as you advance your use of image labeling for machine learning.

Image Labeling by Assignment

Journal of Mathematical Imaging and Vision

Related Papers

Stefania PETRA

We introduce a novel geometric approach to the image labeling problem. A general objective function is defined on a manifold of stochastic matrices, whose elements assign prior data that are given in any metric space, to observed image measurements. The corresponding Riemannian gradient flow entails a set of replicator equations, one for each data point, that are spatially coupled by geometric averaging on the manifold. Starting from uniform assignments at the barycenter as natural initialization, the flow terminates at some global maximum, each of which corresponds to an image labeling that uniquely assigns the prior data. No tuning parameters are involved, except for two parameters setting the spatial scale of geometric averaging and scaling globally the numerical range of features, respectively. Our geometric variational approach can be implemented with sparse interior-point numerics in terms of parallel multiplicative updates that converge efficiently.

We study the inverse problem of model parameter learning for pixelwise image labeling, using the linear assignment flow and training data with ground truth. This is accomplished by a Riemannian gradient flow on the manifold of parameters that determines the regularization properties of the assignment flow. Using the symplectic partitioned Runge–Kutta method for numerical integration, it is shown that deriving the sensitivity conditions of the parameter learning problem and its discretization commute. A convenient property of our approach is that learning is based on exact inference. Carefully designed experiments demonstrate the performance of our approach, the expressiveness of the mathematical model as well as its limitations, from the viewpoint of statistical learning and optimal control.

We introduce a novel algorithm for estimating optimal parameters of linearized assignment flows for image labeling. An exact formula is derived for the parameter gradient of any loss function that is constrained by the linear system of ODEs determining the linearized assignment flow. We show how to efficiently evaluate this formula using a Krylov subspace and a low-rank approximation. This enables us to perform parameter learning by Riemannian gradient descent in the parameter space, without the need to backpropagate errors or to solve an adjoint equation, in less than 10 seconds for a 512 × 512 image using just about 0.5 GB memory. Experiments demonstrate that our method performs as well as highly-tuned machine learning software using automatic differentiation. Unlike methods employing automatic differentiation, our approach yields a low-dimensional representation of internal parameters and their dynamics which helps to understand how networks work and perform that realize assignmen...

Lecture Notes in Computer Science

We introduce and study the unsupervised self-assignment flow for labeling image data (euclidean or manifold-valued) without specifying any class prototypes (labels) beforehand, and without alternating between data assignment and prototype evolution, which is common in unsupervised learning. Rather, a single smooth flow evolving on an elementary statistical manifold is geometrically integrated which assigns given data to itself. Specifying the scale of spatial regularization by geometric averaging suffices to induce a low-rank data representation, the emergence of prototypes together with their number, and the data labeling. Connections to the literature on low-rank matrix factorization and on data representations based on discrete optimal mass transport are discussed.

Reza Babanezhad

We consider the stochastic optimization of finite sums where the functions are smooth and convex on a Riemannian manifold. We present MASAGA, an extension of SAGA on Riemannian manifolds. SAGA is a variance reduction technique that often performs faster in practice compared to methods that rely on updating the expensive full gradient frequently such as SVRG. However, SAGA typically requires extra memory proportional to the size of the dataset to store stale gradients. This memory footprint can be reduced when the gradient vectors have a structure such as sparsity. We show that MASAGA achieves a linear convergence rate when the objective function is smooth, convex, and lies on a Riemannian manifold. Furthermore, we show that MASAGA achieves faster convergence rate with non-uniform sampling than the uniform sampling. Our experiments show that MASAGA is faster than the RSGD algorithm for finding the leading eigenvector corresponding to the maximum eigenvalue.

Proceedings / CVPR, IEEE Computer Society Conference on Computer Vision and Pattern Recognition. IEEE Computer Society Conference on Computer Vision and Pattern Recognition

In this paper, we propose a novel algorithm for computing an atlas from a collection of images. In the literature, atlases have almost always been computed as some types of means such as the straightforward Euclidean means or the more general Karcher means on Riemannian manifolds. In the context of images, the paper's main contribution is a geometric framework for computing image atlases through a two-step process: the localization of mean and the realization of it as an image. In the localization step, a few nearest neighbors of the mean among the input images are determined, and the realization step then proceeds to reconstruct the atlas image using these neighbors. Decoupling the localization step from the realization step provides the flexibility that allows us to formulate a general algorithm for computing image atlas. More specifically, we assume the input images belong to some smooth manifold M modulo image rotations. We use a graph structure to represent the manifold, an...

We present a smooth geometric approach to discrete tomography that jointly performs tomographic reconstruction and label assignment. The flow evolves on a submanifold equipped with a Hessian Riemannian metric and properly takes into account given projection constraints. The metric naturally extends the Fisher-Rao metric from labeling problems with directly observed data to the inverse problem of discrete tomography where projection data only is available. The flow simultaneously performs reconstruction and label assignment. We show that it can be numerically integrated by an implicit scheme based on a Bregman proximal point iteration. A numerical evaluation on standard test-datasets in the few angles scenario demonstrates an improvement of the reconstruction quality compared to competitive methods.

Hans Knutsson

We study optimization problems over Riemannian manifolds using Stochastic Derivative-Free Optimization (SDFO) algorithms. Riemannian adaptations of SDFO in the literature use search information generated within the normal neighbourhoods around search iterates. While this is natural due to the local Euclidean property of Riemannian manifolds, the search information remains local. We address this restriction using only the intrinsic geometry of Riemannian manifolds. In particular, we construct an evolving sampling mixture distribution for generating non-local search populations on the manifold. This is done within a consistent mathematical framework using the Riemannian geometry of the search space and the geometry of mixture distributions over Riemannian manifolds. We first propose a generalized framework for adapting SDFO algorithms on Euclidean spaces to Riemannian manifolds, which encompasses known methods such as Riemannian Covariance Matrix Adaptation Evolutionary Strategies (Ri...

ConfLabeling: Assisting Image Labeling with User and System Confidence

- Conference paper

- First Online: 25 November 2022

- Chia-Ming Chang &

- Takeo Igarashi

Part of the book series: Lecture Notes in Computer Science (LNCS, volume 13518)

Included in the following conference series:

- International Conference on Human-Computer Interaction

Interactive labeling supports manual image labeling by presenting system predictions for users to fix errors. However, existing labeling methods do not effectively consider image difficulty, which may affect system predictions and user labeling. We introduce ConfLabeling, a confidence-based labeling interface that represents image difficulties as user and system confidence. This interface allows users to give a confidence score to each label assignment (user confidence), and our system visualizes the results of predictions with confidence levels (system confidence). We expect user confidence to improve system prediction, and system confidence would help users quickly and correctly identify the images that need to be inspected. We conducted a user study to compare our proposed confidence-based interface with a conventional non-confidence interface in an interactive image labeling task of varying difficulty. The results indicate that the proposed confidence-based interface achieved higher classification accuracy than a non-confidence interface when the image was not too difficult.

Acknowledgements

This work was supported by JST CREST Grant Number JPMJCR17A1, Japan.

Author information

Authors and affiliations.

The University of Tokyo, Tokyo, Japan

Yi Lu, Chia-Ming Chang & Takeo Igarashi

Corresponding author

Correspondence to Chia-Ming Chang.

Editor information

Editors and affiliations.

U.S. Army Research Laboratory, Adelphi, MD, USA

Jessie Y. C. Chen

U.S. Army Combat Capabilities Development Command Soldier Center, Orlando, FL, USA

Gino Fragomeni

Siemens (United States), Princeton, NJ, USA

Helmut Degen

Institute of Computer Science, Foundation for Research and Technology – Hellas (FORTH), Heraklion, Crete, Greece

Stavroula Ntoa

Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper.

Lu, Y., Chang, CM., Igarashi, T. (2022). ConfLabeling: Assisting Image Labeling with User and System Confidence. In: Chen, J.Y.C., Fragomeni, G., Degen, H., Ntoa, S. (eds) HCI International 2022 – Late Breaking Papers: Interacting with eXtended Reality and Artificial Intelligence. HCII 2022. Lecture Notes in Computer Science, vol 13518. Springer, Cham. https://doi.org/10.1007/978-3-031-21707-4_26

DOI : https://doi.org/10.1007/978-3-031-21707-4_26

Published : 25 November 2022

Publisher Name : Springer, Cham

Print ISBN : 978-3-031-21706-7

Online ISBN : 978-3-031-21707-4

eBook Packages : Computer Science Computer Science (R0)

Title: Image Labeling by Assignment

Abstract: We introduce a novel geometric approach to the image labeling problem. Abstracting from specific labeling applications, a general objective function is defined on a manifold of stochastic matrices, whose elements assign prior data that are given in any metric space, to observed image measurements. The corresponding Riemannian gradient flow entails a set of replicator equations, one for each data point, that are spatially coupled by geometric averaging on the manifold. Starting from uniform assignments at the barycenter as natural initialization, the flow terminates at some global maximum, each of which corresponds to an image labeling that uniquely assigns the prior data. Our geometric variational approach constitutes a smooth non-convex inner approximation of the general image labeling problem, implemented with sparse interior-point numerics in terms of parallel multiplicative updates that converge efficiently.

Subjects: Computer Vision and Pattern Recognition (cs.CV); Optimization and Control (math.OC)

MSC classes: 62H35, 65K05, 68U10, 62M40

This problem has been solved!

You'll get a detailed solution from a subject matter expert that helps you learn core concepts.

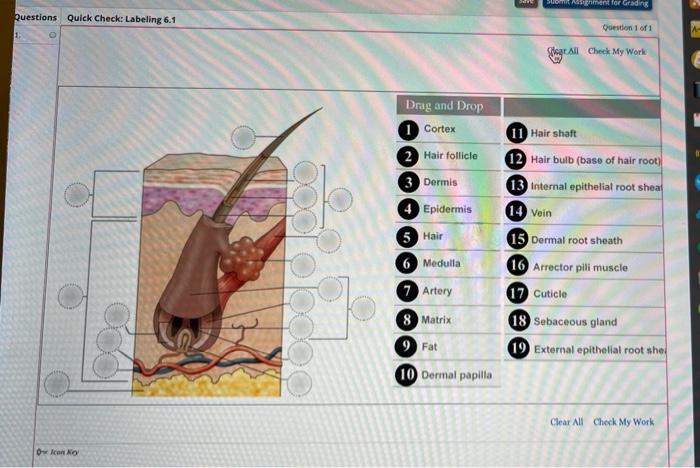

Question: Sement for Grading Questions Quick Check: Labeling 6.1 Question 1 of 1 Siege All Check My Work Drag and Drop Cortex 2 Hair follicle 3 Dermis 4 Epidermis 11 Hair shaft 12 Hair bulb (base of hair root) 13 Internal epithelial root shea 14 vein 5 Hair 6 Medulla 7 Artery 15 Dermal root sheath 16 Arrector pili muscle 17 Cuticle 18 Sebaceous gland External

On the right side, the inner 1st circle from the top is for the Cortex of Hair Shaft.

Not the question you’re looking for?

Post any question and get expert help quickly.

IMAGES

VIDEO

COMMENTS

lymph nodes. tonsil and adenoid. lymphatic Vessels. thymus. vermiform appendix. Spleen & Thymus. Bone Marrow. Inguinal Lymph Nodes. Study with Quizlet and memorize flashcards containing terms like lymph nodes, tonsil and adenoid, lymphatic Vessels and more.

Start studying Ch. 6 Labeling: Lymphatic System. Learn vocabulary, terms, and more with flashcards, games, and other study tools.

Label the structures of the upper respiratory tract. Share. Get better grades with Learn. 82% of students achieve A's after using Learn. Study with Learn. ... Assignment 6.2: Chapter 11 Respiratory System. Teacher 20 terms. quizlette9817862. Preview. Seidel Ch. 10 (lymphatic system) 22 terms. julia88w. Preview. Anatomy Cardio Vascular system.

Image annotation is a type of image labeling used to create datasets for computer vision models. You can split these datasets into training sets to train ML models and test or validate datasets before using them to evaluate model performance. Data scientists and machine learning engineers employ these datasets to train and evaluate ML models.

Motivation. Image labeling is a basic problem of variational low-level image analysis. It amounts to determining a partition of the image domain by uniquely assigning to each pixel a single element from a finite set of labels. Most applications require such decisions to be made depending on other decisions.

1.1 Motivation. at some global maximum, each of which corresponds to an image labeling that uniquely assigns the prior data. Our geo- Image Labeling is a basic problem of variational low-level metric variational approach constitutes a smooth non-convex image analysis.

View 6-1 Mastering AP Lab - Module Six Homework.docx from BIOLOGY BIO-210 at Southern New Hampshire University. 6-1 Mastering A&P Lab: Module Six Homework Art Labeling Activity: Figure 13.1 Drag the. AI Chat with PDF. Expert Help. Study Resources. ... CCJS_105_Assignment_1_David.docx. Individual Assignment 11-TM.docx.

View Assignment_6.1_Upper_Respiratory_Tract.pdf from BILLING AND CODING MOD 4 at Ross Medical Education Center. Tamesha Andrews 02/18/2021 Name:_Date:_ Assignment 6.1: Upper Respiratory Tract Label ... Label the upper respiratory tract picture below using the provided word bank. View full document.

Label the following structures by indicating which letter they correspond to in this image of the larynx: thyroid cartilage cricoid cartilage epiglottis (1/2 point per identification; 1 ½ point total) Identify the following by indicating the letter that corresponds to the structure. (1/2 point per identification; 3 points total)

View Assignment 6.1 Upper Respiratory Tract (1).pdf from MA 101D at Ross Medical Education Center. Name:_Date:_ Assignment 6.1: Upper Respiratory Tract Label the upper respiratory tract picture below ... Label the upper respiratory tract picture below using the provided word bank. ... Question 6 1 out of 1 points The key to any serious process ...

proximal convoluted tube. (4) descending nephron loop. distal convoluted tubule. collection tubule. ascending nephron loop. peritubular capillaries. Study with Quizlet and memorize flashcards containing terms like Kidney, urinary bladder, female urethra and more.

1.1 Motivation. Image Labeling is a basic problem of variational low-level image analysis. It amounts to determining a partition of the image domain by uniquely assigning to each pixel a single element from a finite set of labels. Most applications require such decisions to be made depending on other decisions. This gives rise to a global objective function whose minima correspond to favorable ...
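The idea of a labeling as a partition, scored by a global objective, can be made concrete with a small sketch. The code below is illustrative only and is not taken from the quoted paper: the function names, the toy grayscale image, the prototype intensities, and the Potts-style smoothness penalty weighted by `lam` are all assumptions chosen for the example. Each pixel first gets the label of its nearest prototype (a purely local decision), and the energy then shows how neighboring decisions can be coupled through a penalty on label changes.

```python
from collections import defaultdict

def label_pixels(image, prototypes):
    """Assign each pixel the index of the nearest prototype intensity (local decision)."""
    return [
        [min(range(len(prototypes)), key=lambda k: abs(px - prototypes[k])) for px in row]
        for row in image
    ]

def partition(labels):
    """Group pixel coordinates by label; every pixel lands in exactly one region."""
    regions = defaultdict(set)
    for r, row in enumerate(labels):
        for c, lab in enumerate(row):
            regions[lab].add((r, c))
    return dict(regions)

def energy(image, labels, prototypes, lam=0.5):
    """Hypothetical global objective: data cost plus a Potts-style smoothness penalty."""
    rows, cols = len(image), len(image[0])
    data = sum(
        abs(image[r][c] - prototypes[labels[r][c]])
        for r in range(rows) for c in range(cols)
    )
    # Count horizontally and vertically adjacent pixel pairs whose labels differ.
    jumps = 0
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols and labels[r][c] != labels[r][c + 1]:
                jumps += 1
            if r + 1 < rows and labels[r][c] != labels[r + 1][c]:
                jumps += 1
    return data + lam * jumps

# Toy 2x3 grayscale image and two assumed prototype intensities (dark, bright).
image = [
    [0.1, 0.2, 0.9],
    [0.0, 0.8, 1.0],
]
prototypes = [0.0, 1.0]

labels = label_pixels(image, prototypes)
regions = partition(labels)
total = energy(image, labels, prototypes, lam=0.5)
```

Because the smoothness term depends on pairs of neighboring pixels, lowering the energy can require changing one pixel's label in light of its neighbors, which is the sense in which decisions depend on other decisions.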

We propose ConfLabeling, an interactive confidence-based labeling interface (system) that represents image difficulties as user and system confidence. The proposed labeling system defines both user and system confidences to help users in image labeling. User confidence is the user's perception of each image.

Image Labeling by Assignment. Freddie Åström, Stefania Petra, Bernhard Schmitzer, Christoph Schnörr. We introduce a novel geometric approach to the image labeling problem. Abstracting from specific labeling applications, a general objective function is defined on a manifold of stochastic matrices, whose elements assign prior data that are ...
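To illustrate what a stochastic matrix of assignments looks like, here is a minimal sketch. It does not implement the geometric approach described in the abstract: it only builds a row-stochastic matrix in which each row holds one pixel's probabilities over the labels, using a softmax over negative distances to assumed prototype values. The names `assignment_matrix`, `round_to_labels`, and the scale parameter `rho` are hypothetical.

```python
import math

def assignment_matrix(values, prototypes, rho=0.1):
    """Return a row-stochastic matrix: row i is pixel i's distribution over labels."""
    rows = []
    for v in values:
        # Closer prototypes get higher scores; rho controls how sharp the rows are.
        scores = [-abs(v - p) / rho for p in prototypes]
        m = max(scores)  # subtract the maximum for numerical stability
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        rows.append([e / total for e in exps])
    return rows

def round_to_labels(W):
    """Turn soft assignments into a unique labeling by taking each row's largest entry."""
    return [max(range(len(row)), key=row.__getitem__) for row in W]

# Assumed toy data: three pixel intensities and two prototype intensities.
values = [0.1, 0.4, 0.9]
prototypes = [0.0, 1.0]

W = assignment_matrix(values, prototypes)
hard_labels = round_to_labels(W)
```

Each row of `W` is non-negative and sums to one, so a unique labeling corresponds to the special case where every row has a single entry equal to one.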
